Pick the Right Type of Rubric

There are three main types of rubrics, each with its own strengths and weaknesses. You are likely to use a variety of types to fit the needs of different assignments.

Analytic Rubrics

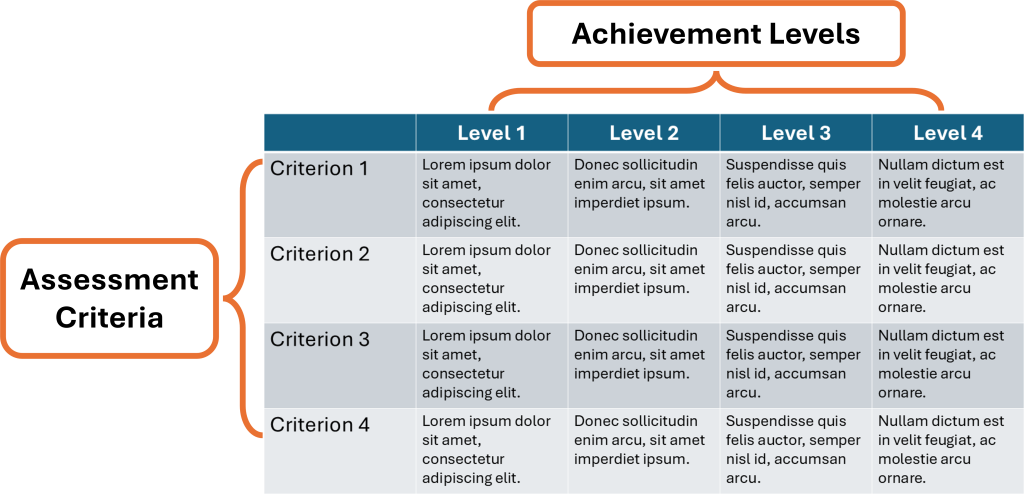

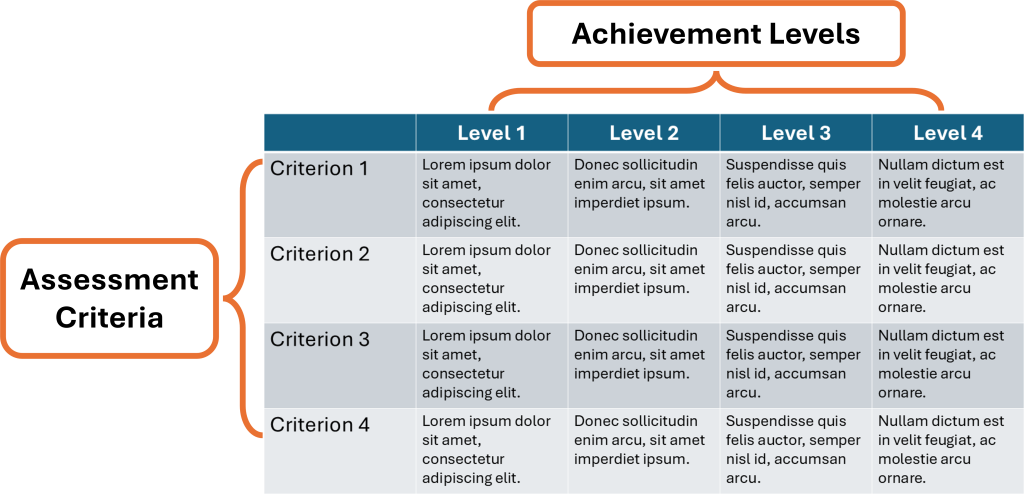

When we think of rubrics, the most common visual is of an analytic rubric, which includes both assessment criteria (usually the rows of the table) and achievement levels (usually the columns of the table).

Analytic rubrics are ideal for assignments whose criterion levels have straightforward, quantifiable characteristics. They can also be beneficial when uniformity and transparency are priorities, such as with assessments that are shared across multiple sections and instructors.

| Potential Strengths | Potential Weaknesses |
| --- | --- |
| Specificity | Time-consuming to develop |
| Clarity/transparency of expectations | Rigidity/inflexibility |
| Efficiency in grading | Overly prescriptive |

Criteria-Only Rubrics / Single-Point Rubrics

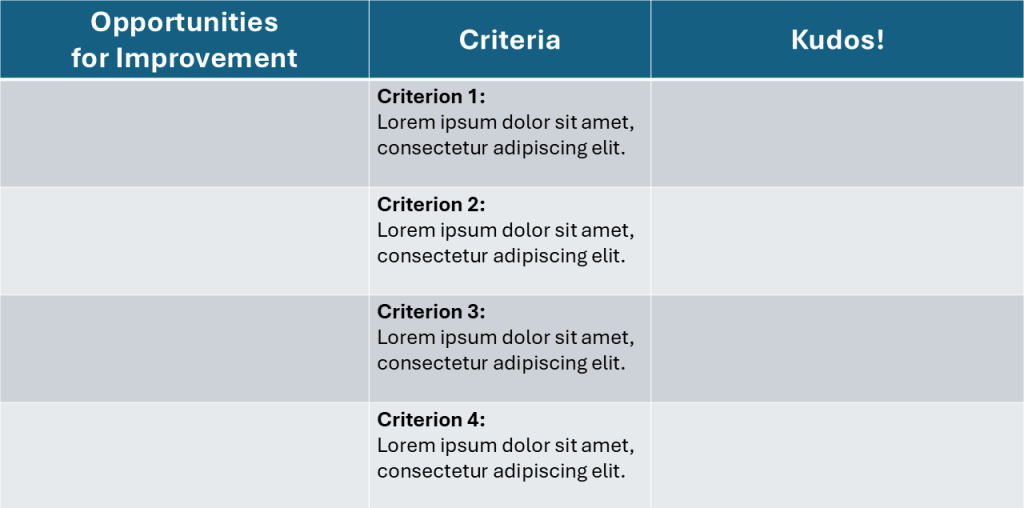

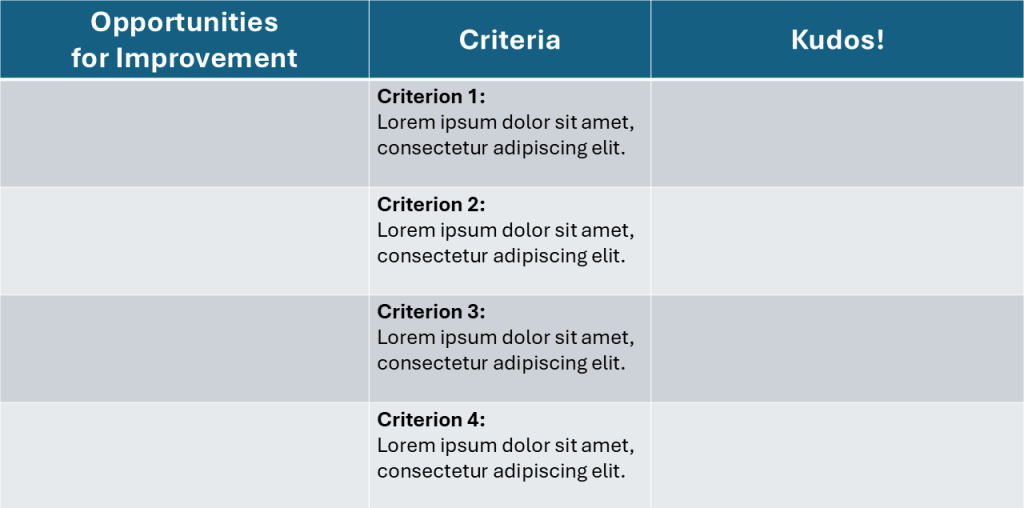

These rubrics identify only assessment criteria, not achievement levels. Essentially, the whole rubric is the highest-level description for each criterion from an analytic rubric.

The standard format of a single-point rubric¹ provides room for both praise and constructive criticism on either side of each criterion. These rubrics are great for drafts and highly creative assignments, as well as for instructors who are implementing ungrading approaches to assessment.

| Potential Strengths | Potential Weaknesses |
| --- | --- |
| Emphasis on formative feedback and the development process | Less transparency for students (compared to analytic) |
| More efficient to create | Less efficient to grade (compared to analytic) |

Modified Single-Point Rubric

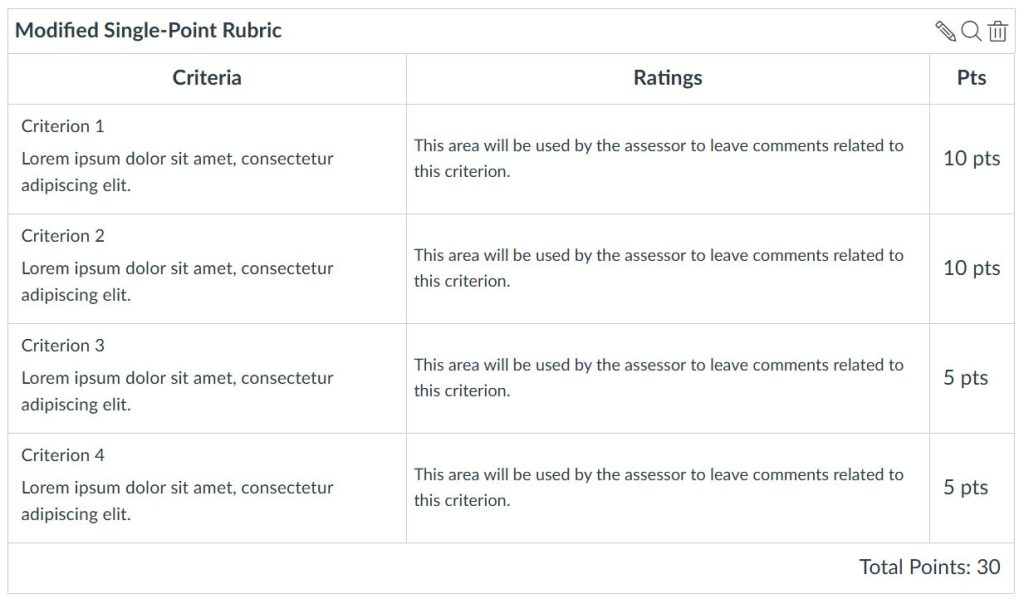

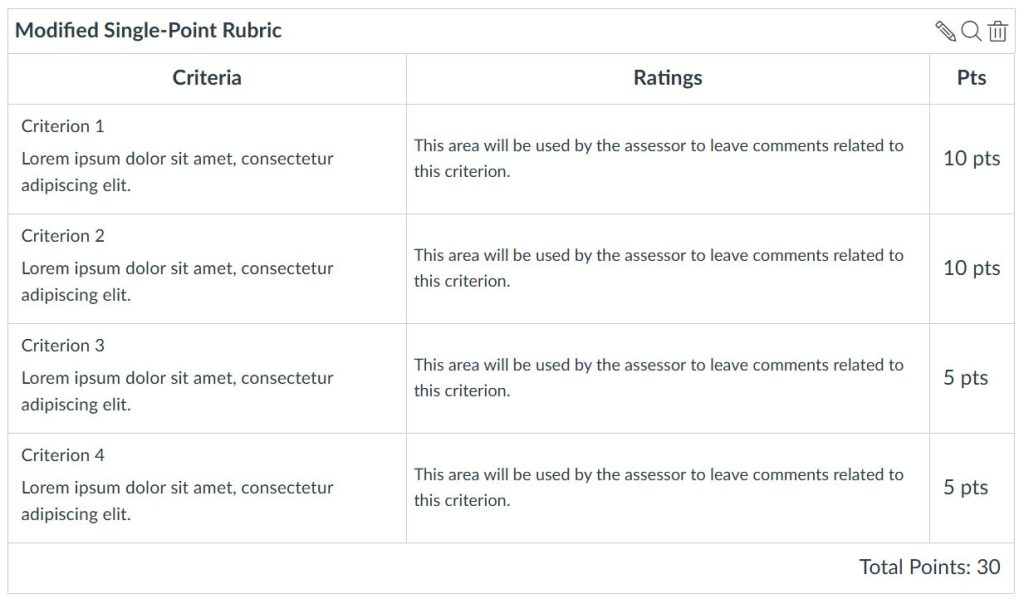

The single-point rubric format cannot be programmed into Canvas, so many instructors use a modified version that has one space for each criterion to include both praise and constructive criticism rather than boxes on either side of the criterion.

This format also works well for a checklist approach to a rubric. Each criterion represents an element that must be included in the submission, which makes the format ideal for assessing completion-based activities.

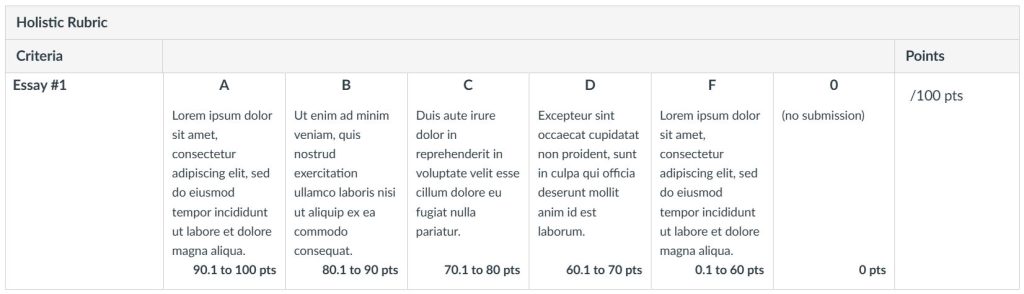

Holistic Rubrics

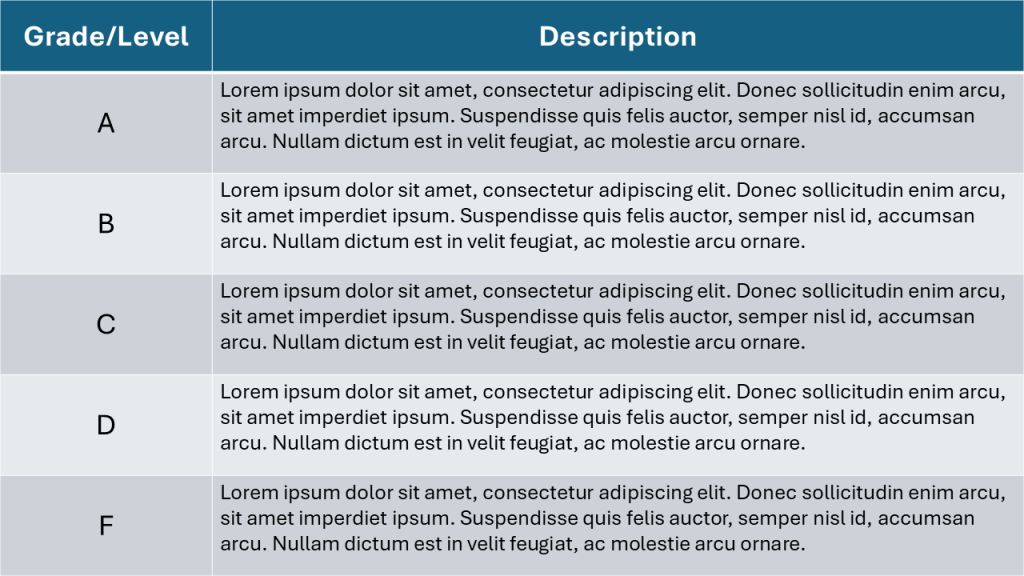

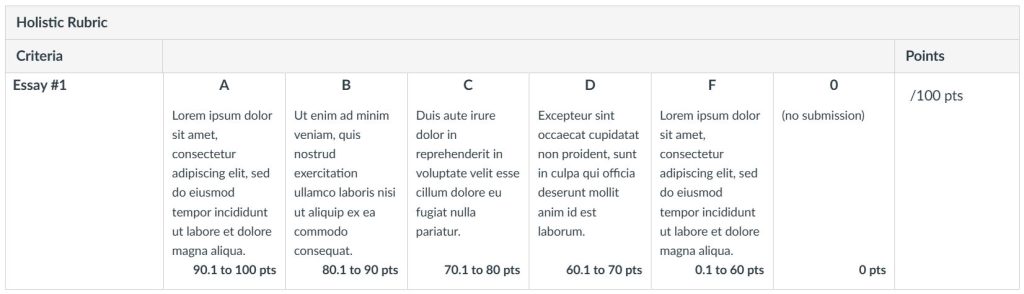

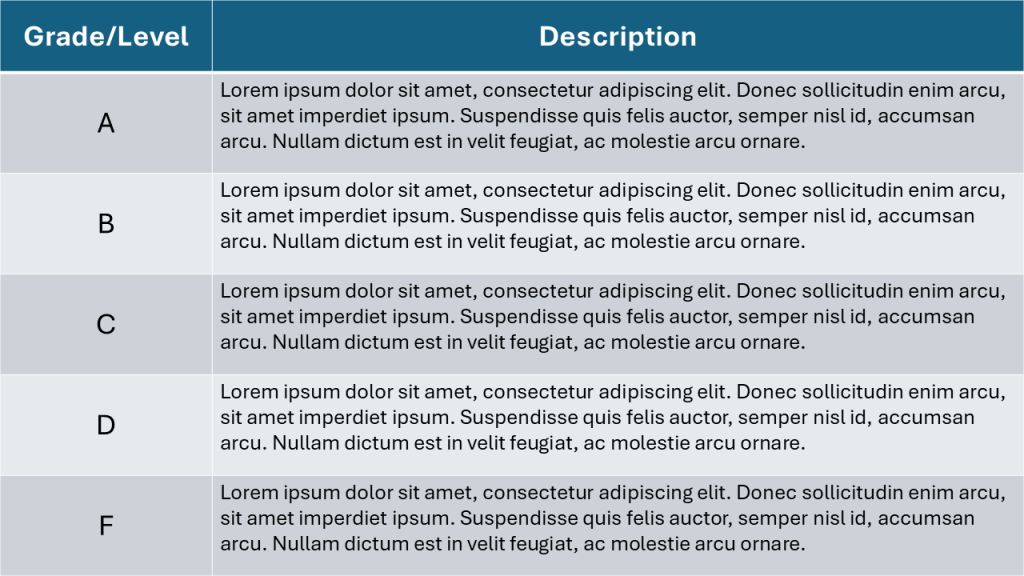

Our last rubric type contains only achievement levels (either a grade or other level label), not assessment criteria. Instead of assessing work by first assessing its components/characteristics, it takes a holistic view of the submission.

Each level of a holistic rubric is accompanied by a description of the characteristics of that level of achievement. Where analytic rubrics parse out those characteristics into criteria (each worth a certain number of points), holistic rubrics don’t assign a point value to each characteristic individually.

Holistic rubrics are ideal for simpler, lower-stakes assignments where you want to provide more feedback than just a complete/incomplete status but not as much as for rubrics with assessment criteria.

| Potential Strengths | Potential Weaknesses |
| --- | --- |
| Emphasizes the work’s nature as a whole (i.e., more than the sum of its parts) | Less transparency for students |
| Easy/quick to create | Lack of nuance |
| Quick to grade | Inefficient for large and/or complex submissions |

NOTE: If you wish to use a holistic rubric in Canvas, it would mean using only one criterion for the whole assignment.

For any of these approaches, putting your rubric in Canvas can make it easier for students to access and for you to grade.

Align with Learning Objectives

All rubrics need to be aligned with the learning objectives of the corresponding assignments, which helps ensure that grades reflect student learning. When writing criteria and level descriptions, start with what knowledge or skills students are demonstrating.

This is an opportunity for you to double-check that your assignment is aligned with course learning outcomes. You can also consider the Universal Design for Learning (UDL) strategy of separating the ends from the means:

From a UDL perspective, goals and objectives should be attainable by different learners in different ways. In some instances, linking a goal with the means for achievement may be intentional; however, often times we unintentionally embed the means of achievement into a goal, thereby restricting the pathways students can take to meet it.²

Your learning objectives (and their priorities) should also be reflected in point values. In rubrics that include criteria (that is, not holistic rubrics), the criteria do not all need to be worth the same number of points. Show students where to focus their energy by distributing points to place emphasis. For example, “Organization” in a paper or podcast may be worth 3 or 4 times as much as “Format/Mechanics.”
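To make the weighting concrete, here is a minimal Python sketch of how unequal point values shape a total score. The “Use of Evidence” criterion, all of the point values, and the helper `score_submission` are hypothetical, chosen only to illustrate a 4:1 emphasis between “Organization” and “Format/Mechanics”; this is not how Canvas or any other gradebook calculates grades.

```python
# Hypothetical analytic rubric: maximum points per criterion.
# "Organization" is weighted 4x "Format/Mechanics" to signal priority.
RUBRIC_POINTS = {
    "Organization": 20,
    "Use of Evidence": 15,
    "Format/Mechanics": 5,
}


def score_submission(level_fractions: dict[str, float]) -> float:
    """Return total points given the fraction of each criterion earned.

    level_fractions maps each criterion to a value between 0 and 1
    (for example, 1.0 for the top level, 0.5 for a middle level).
    """
    missing = set(RUBRIC_POINTS) - set(level_fractions)
    if missing:
        raise ValueError(f"Missing criteria: {sorted(missing)}")
    return sum(
        RUBRIC_POINTS[name] * level_fractions[name] for name in RUBRIC_POINTS
    )


# Two submissions that each fall short on exactly one criterion.
weak_organization = score_submission(
    {"Organization": 0.5, "Use of Evidence": 1.0, "Format/Mechanics": 1.0}
)
weak_mechanics = score_submission(
    {"Organization": 1.0, "Use of Evidence": 1.0, "Format/Mechanics": 0.5}
)

print(weak_organization)  # 30.0 out of 40
print(weak_mechanics)     # 37.5 out of 40
```

With these assumed values, a middle-level performance on “Organization” costs 10 points, while the same shortfall on “Format/Mechanics” costs only 2.5, so the point distribution itself tells students which criterion matters most.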

Consider Cognitive Load

Keeping your rubric compact aligns with the principle of chunking your content to maximize engagement. To ensure that your rubric is clear and effective, avoid overloading it with details: too many assessment criteria and/or too many levels of achievement can make it hard for students to follow. The sweet spot is 4-6 criteria and/or 3-5 levels.
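As a rough illustration, the suggested ranges can be written as a quick self-check for a draft rubric. The function name `check_rubric_size` and the sample rubric are hypothetical; the 4-6 and 3-5 bounds come from the guideline above and are a rule of thumb, not a hard requirement.

```python
def check_rubric_size(criteria: list[str], levels: list[str]) -> list[str]:
    """Return warnings if the rubric falls outside the suggested ranges."""
    warnings = []
    if not 4 <= len(criteria) <= 6:
        warnings.append(f"{len(criteria)} criteria (suggested: 4-6)")
    if not 3 <= len(levels) <= 5:
        warnings.append(f"{len(levels)} levels (suggested: 3-5)")
    return warnings


# Hypothetical draft rubric with too many criteria.
draft_criteria = [
    "Thesis", "Organization", "Use of Evidence", "Analysis",
    "Audience Awareness", "Citations", "Format/Mechanics",
]
draft_levels = ["Exemplary", "Proficient", "Developing"]

for warning in check_rubric_size(draft_criteria, draft_levels):
    print(warning)  # prints: 7 criteria (suggested: 4-6)
```

A warning here is a prompt to consider merging related criteria (for example, folding “Citations” into “Use of Evidence”), not a rule that must be satisfied.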

Leverage Language to Increase Transparency

Use Consistent Language

When designing rubrics, it’s important to use consistent language (terminology, phrasing) across the entire rubric so students won’t be confused by or misunderstand the requirement(s).³

Use Measurable Language for Rating Levels

Rubrics should focus on the observable characteristics of the submission, e.g., “Cites 3 scholarly sources.” Vague language that is more interpretive and/or subjective, e.g., “Well-supported argument,” makes it harder for students to hit the mark. When thinking about the criteria, consider what evidence you’ll need to be sure that students have met the objective.

Let’s consider Smaldino, Lowther, & Russell’s (2008) ABCD model for learning objectives (Instructional Technology and Media for Learning, 9th Ed.). The first three elements have been addressed in the assignment description; the degree of proficiency is the part that applies to the rubric.

- A: Audience (the learners)

- B: Behavior (the skill that learners must demonstrate)

- C: Condition (the condition under which they’re assessed)

- D: Degree (the level of proficiency or accuracy)

Use Descriptive, Not Evaluative, Language

All language used in rubrics needs to be descriptive as opposed to evaluative. This approach supports a “growth mindset” and primes students for the iterative process of improving their work over time. Descriptive language also provides clearer expectations (a best practice for creating activities).

This can also apply to the level headings that describe what you expect students to do at each level of development; avoid language that diminishes their efforts. For example, level headings of Exemplary/Advanced, Proficient, and Developing are more supportive and inclusive than Good, Average, and/or Poor.

Evaluate Your Rubric (& Get Feedback)

Here are three ideas (in no particular order):

Get Student Input

You can invite your students into the conversation while drafting the rubric. Coauthoring rubrics with students can be a particularly powerful strategy for developing student agency and creating buy-in for your assignment (Culturally Sustaining Pedagogy [CSP], UDL Consideration 7.1).

You can also get student feedback on the current version of your rubric. Are there any sections that are unclear? Do the descriptions need more detail? Does anything need to be revised? If you review the rubric with them before they start the assignment, you can get feedback and make changes (also good for CSP and UDL).

Try Asking AI for Input

Whether you would like a starting point generated for you or feedback on a draft, you could consult a generative AI tool like ChatGPT. We tried it out ourselves. We recommend that you provide your specific learning objectives and priorities to get the most helpful results. Be transparent about this use with your students to model good digital citizenship: you can add a note or citation to your rubric or assignment instructions to reference how you used AI.

Test the Rubric with Sample Work

Ideally, you could use the rubric to grade a couple of past submissions to test it. However, if the assignment is new and you do not have any examples, you could ask AI to complete the assignment for you and then test the effectiveness of the rubric on that submission. Does the rubric provide feedback to the student that will help them improve their work? Is the grade an accurate representation of how they’ve met the learning objective(s)?

AI Disclosure: The Instructional Design Dynamic Duo consulted ChatGPT when developing this blog post. We had so many ideas that we were having trouble organizing them into clear best practices, so we wanted to see what ChatGPT would consider to be best practices. We were able to use some of the language provided and reorganized the practices into five.